FlExible assembLy manufacturIng with human-robot Collaboration and digital twin modEls (FELICE)

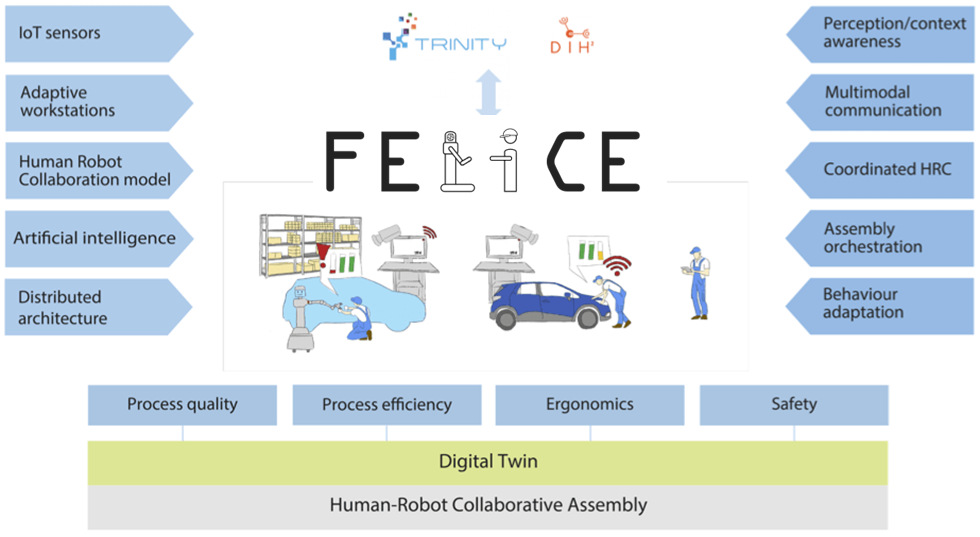

FELICE addresses one of the greatest challenges in robotics: the coordinated interaction and combination of human and robot skills. The proposal targets the application priority area of agile production and aspires to design the next generation of assembly processes required to address current and pressing needs in manufacturing. To this end, it envisages adaptive workspaces and a cognitive robot collaborating with workers on assembly lines. FELICE unites multidisciplinary research in collaborative robotics, AI, computer vision, IoT, machine learning, data analytics, cyber-physical systems, process optimization and ergonomics to deliver a modular platform that integrates and harmonizes an array of autonomous and cognitive technologies. The platform aims to increase the agility and productivity of a manual assembly production system, ensure the safety of factory workers, and improve their physical and mental well-being. The key to achieving these goals is to develop technologies that combine the accuracy and endurance of robots with the cognitive ability and flexibility of humans. Being inherently more adaptive and configurable, such technologies will help future manufacturing assembly floors become agile, allowing them to respond in a timely manner to customer needs and market changes.

The FELICE framework comprises two layers:

- A local layer, introducing a single collaborative assembly robot that will roam the shop floor assisting workers, together with adaptive workstations able to automatically adjust to the workers’ somatometries and to provide multimodal informative guidance and notifications on assembly tasks

- A global layer, which will sense and operate upon the real world via an actionable digital replica of the entire physical assembly line.

Related developments will proceed along the following directions:

- Implementing perception and cognition capabilities based on many heterogeneous sensors on the shop floor, which will allow the system to build context-awareness

- Advancing human-robot collaboration, enabling robots to operate safely and ergonomically alongside humans and to share and reallocate tasks with them, so that an assembly production process can be reconfigured efficiently and flexibly

- Realizing a manufacturing digital twin, i.e. a virtual representation tightly coupled with production assets and the actual assembly process, enabling the management of operating conditions, the simulation of the assembly process and the optimization of various aspects of its performance.

FELICE foresees two environments for experimentation, validation, and demonstration. The first is a small-scale prototyping environment aimed at validating technologies before they are applied in the second, larger setting: the industrial environment of one of the largest automotive manufacturers in Europe. The consortium considers this effort a timely response to international competition, trends, and progress, pursuing results that are visionary and go far beyond the current state of the art.


Project name:

FELICE – Flexible Assembly Manufacturing with Human-Robot Collaboration and Digital Twin Models

Funding:

H2020-EU.2.1.1. (EU Grant ID number: 101017151)

Total Budget:

€ 6 342 975

Duration:

01.01.2021 – 30.06.2024

Coordinator:

INSTITUTE OF COMMUNICATION AND COMPUTER SYSTEMS, Greece

Partners:

PROFACTOR GMBH, Austria

CENTRO RICERCHE FIAT SCPA, Italy

FH OO FORSCHUNGS & ENTWICKLUNGS GMBH, Austria

AEGIS IT RESEARCH GMBH, Germany

FORSCHUNGSGESELLSCHAFT FUR ARBEITSPHYSIOLOGIE UND ARBEITSSCHUTZ E.V., Germany

IDRYMA TECHNOLOGIAS KAI EREVNAS, Greece

CAL-TEK SRL, Italy

TECHNISCHE UNIVERSITAT DARMSTADT, Germany

UNIVERSITA DEGLI STUDI DI SALERNO, Italy

FRAUNHOFER GESELLSCHAFT ZUR FOERDERUNG DER ANGEWANDTEN FORSCHUNG E.V., Germany

STANCZYK BARTLOMIEJ, Poland

EUNOMIA LIMITED, Ireland


More details: https://www.felice-project.eu/